Choosing the right LLM stack: open-source, APIs, or fine-tuning

Created Date

26 Nov, 2024

INTRODUCTION


If you’re building an AI feature today, you’ll quickly run into a deceptively simple question:

Should we use an API model, go open-source, or fine-tune our own?

Each option has trade-offs across quality, cost, control, security, and engineering complexity.

At Arctura AI, we’ve helped teams ship LLM-powered products using all three approaches—and most mature systems end up being a mix rather than a single bet.

This article is a practical guide for product and engineering teams deciding how to choose (and evolve) their LLM stack.

1. The three main options in plain language

Before comparing them, let’s define them without jargon.

1.1 Hosted API models

You call an external provider’s API (e.g., a major LLM vendor) and send prompts + data; they return model outputs.

You get:

- Strong out-of-the-box quality

- No need to manage GPUs or scaling

- Fast access to new model versions

You trade off:

- Less control over internals and deployment

- Ongoing API costs that scale with usage

- Data governance questions, depending on provider and region

1.2 Open-source / self-hosted models

You run models such as Llama, Qwen, or Mistral on your own infra (cloud or on-prem), often with custom serving and optimization.

You get:

- More control over where data lives

- Ability to customize the stack deeply

- Potential long-term cost savings at large scale

You trade off:

- Engineering overhead (serving, scaling, observability)

- Ongoing maintenance as models and hardware evolve

- Need for in-house ML and infra expertise

1.3 Fine-tuning

You train a base model further on your own data or tasks, either:

- Via a provider’s fine-tuning API, or

- On your own infra with open-source models.

You get:

- Better performance on your specific style, domain, or tasks

- More predictable outputs for structured tasks (classification, formatting, style)

You trade off:

- Need for curated training data

- Extra engineering + MLOps complexity

- Risk of overfitting or regressions if evaluation is weak

Fine-tuning isn’t a separate “stack”—it’s a layer you can add to either API or open-source approaches.

2. How to think about the decision: five key dimensions

Instead of arguing “open-source vs closed,” it’s more productive to score your use case across five dimensions:

- Quality & capability needs

- Data sensitivity & compliance

- Latency & reliability

- Cost profile (now vs scale)

- Team & execution capacity

Let’s look at each briefly.

2.1 Quality & capability

Ask:

- How hard is the task?

  - Simple classification vs multi-step reasoning, code, or complex instructions

- Do we need strong multilingual support?

- Do we care about subtle writing style, tone, or domain nuance?

For many teams, top-tier API models still win on raw capability, especially for:

- Complex reasoning

- Long-context understanding

- High-quality natural language generation

Open-source has been catching up fast, especially for shorter contexts, focused tasks, or where you can constrain the problem with good prompting and retrieval.

2.2 Data sensitivity & compliance

Key questions:

- Is any of this data allowed to leave our VPC / region?

- Are we handling PII, regulated data (health / finance), or trade secrets?

- What does our legal / security team require for vendor usage?

If policy says “no third-party model can see this data”, you’ll lean towards:

- Self-hosted open-source, or

- Private deployments of proprietary models offered in your own cloud.

If policies are more flexible—but you still care about privacy—using API models with strict enterprise terms and data controls can be acceptable and much faster to get started.

2.3 Latency & reliability

- Is this user-facing and interactive (sub-second to a few seconds), or batch / offline?

- How sensitive is the experience to occasional slowdowns?

- Do we need low latency in specific regions?

API models can be very fast, but you are subject to external network hops and shared capacity.

Self-hosting can give you:

- Tighter control over latency

- Ability to colocate models nearer to your data and services

…but only if you invest in proper serving and autoscaling.

2.4 Cost profile

Don’t just ask “which is cheaper?” Ask:

- What is our expected volume in the next 6–12 months?

- Do we expect spiky or steady traffic?

- Are we okay with Opex (API spend) or do we want to move part of it into Capex / infra?

Roughly:

- At low to moderate scale, APIs often win because you avoid infra overhead.

- At very high scale or where you can run smaller specialized models, self-hosting can be significantly cheaper per token.

Fine-tuning can improve quality and allow smaller models to replace larger ones, further reducing cost—but it adds its own training and maintenance costs.
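A rough way to put numbers on this trade-off is to find the monthly volume at which a flat self-hosting bill equals usage-based API spend. The sketch below is a simplified model under stated assumptions: every price is a hypothetical placeholder (not a real vendor or cloud rate), self-hosted cost is treated as flat, and training and fine-tuning costs are ignored.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """API spend scales linearly with usage."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def monthly_self_hosted_cost(fixed_infra: float, ops_overhead: float) -> float:
    """Self-hosting modeled as a flat monthly cost (hardware plus engineering time),
    assuming provisioned capacity already covers the traffic."""
    return fixed_infra + ops_overhead


def break_even_tokens(price_per_million_tokens: float, fixed_infra: float, ops_overhead: float) -> float:
    """Monthly token volume above which self-hosting becomes cheaper than the API."""
    return (fixed_infra + ops_overhead) / price_per_million_tokens * 1_000_000


# Hypothetical inputs for illustration only; replace with your own quotes.
PRICE_PER_M = 5.0    # $ per million tokens (blended input/output), assumed
GPU_COST = 8_000.0   # $ per month for serving hardware, assumed
OPS_COST = 12_000.0  # $ per month of engineering and on-call time, assumed

threshold = break_even_tokens(PRICE_PER_M, GPU_COST, OPS_COST)
print(f"Break-even at ~{threshold / 1e9:.1f}B tokens/month")
for volume in (500e6, 4e9, 10e9):
    api = monthly_api_cost(volume, PRICE_PER_M)
    hosted = monthly_self_hosted_cost(GPU_COST, OPS_COST)
    print(f"{volume / 1e9:>5.1f}B tokens: API ${api:,.0f} vs self-hosted ${hosted:,.0f}")
```

With these made-up inputs the crossover lands at 4B tokens per month. Real self-hosted cost rises in steps as you add GPUs, so it is worth rerunning this with your own traffic forecast rather than trusting a single threshold.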

2.5 Team & execution capacity

This is the most under-appreciated dimension.

Ask honestly:

- Do we have people who’ve run ML models in production before?

- Are we willing to maintain this stack for years?

- Is LLM infra a core competency we want to own, or a means to an end?

If your team is small, or you’re early in the product, it’s usually better to:

Buy simplicity first, then own more later if needed.

That often means starting with APIs, plus a path toward more control as you grow.

3. When to choose API-first

In many projects, we recommend starting with API models, especially for the first MVP.

Good fits:

- You’re still learning what users actually need from the AI feature.

- You care about speed to market and iteration.

- Your volume is uncertain—you might scale a lot, or not.

- You don’t have a dedicated ML infra team yet.

Advantages

- Fastest to ship and experiment

- Access to state-of-the-art models without managing GPUs

- Provider handles scaling, updates, security patches

- Easy to mix different models (e.g. smaller for routing, larger for final answers)

Watch-outs

- Monitor cost per user / per task from day one. It’s easy to lose track.

- Design your architecture to be modular: wrap calls behind interfaces so you can swap models later.

- Understand your provider’s data usage and retention policies clearly.
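One way to act on the first two watch-outs is a thin interface that product code depends on, plus a wrapper that meters token usage per task. This is a minimal sketch: `ChatModel`, `EchoModel`, `MeteredModel`, and the per-1K-token price are hypothetical names and numbers, and `EchoModel` stands in for a real adapter around a vendor SDK or a self-hosted server (it approximates tokens with word counts).

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Completion:
    text: str
    input_tokens: int
    output_tokens: int


class ChatModel(Protocol):
    """The only surface product code is allowed to depend on."""

    @property
    def name(self) -> str: ...

    def complete(self, prompt: str) -> Completion: ...


class EchoModel:
    """Hypothetical stand-in backend; word counts approximate token counts."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> Completion:
        n_words = len(prompt.split())
        return Completion(text=f"[{self.name}] {prompt}", input_tokens=n_words, output_tokens=n_words)


@dataclass
class MeteredModel:
    """Wraps any ChatModel and accumulates token usage per task."""

    inner: ChatModel
    price_per_1k_tokens: float  # hypothetical blended price in $
    usage: dict[str, int] = field(default_factory=dict)

    @property
    def name(self) -> str:
        return self.inner.name

    def complete(self, prompt: str, task: str = "default") -> Completion:
        result = self.inner.complete(prompt)
        tokens = result.input_tokens + result.output_tokens
        self.usage[task] = self.usage.get(task, 0) + tokens
        return result

    def cost(self, task: str) -> float:
        return self.usage.get(task, 0) / 1000 * self.price_per_1k_tokens


# Call sites only see the interface, so swapping backends is a one-line change.
model = MeteredModel(EchoModel("vendor-a"), price_per_1k_tokens=0.01)
model.complete("summarize this support ticket", task="summarize")
```

Because call sites only see `ChatModel`, moving a task to another provider or to a self-hosted model means writing one new adapter instead of touching every caller, and the per-task usage numbers are already in place when you compare options.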

Rule of thumb:

If you’re pre-product/market fit or still validating the AI feature, API-first is almost always the right move.

4. When to go open-source / self-hosted

Self-hosting starts to make sense when at least one of these is true:

- Data can’t leave your environment for legal or security reasons.

- Your usage is high and predictable enough that infra costs will be meaningfully lower than API spend.

In either case, you need to have (or be willing to build) the infra and ML capabilities to operate models.

Advantages

- Full control over where data and logs live

- Ability to customize serving stack, quantization, routing, etc.

- Potentially lower marginal cost at scale

- Freedom to experiment with niche or specialized models

Costs and risks

- Need to manage deployment, scaling, monitoring, and upgrades

- Responsible for security, patching, and incident response

- Risk of getting stuck maintaining infra that’s not core to your product

In practice, many teams adopt a hybrid approach:

- Self-host a mid-size open-source model for most tasks

- Use a hosted frontier model only for the hardest cases, or as a backup

This balances control and cost with access to top-tier capability when needed.
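The hybrid pattern boils down to a small routing function. In this sketch, `is_hard` is a placeholder heuristic (in practice it might be a classifier, a task label, or a confidence score), `ModelUnavailable` is a hypothetical exception your self-hosted client would raise on timeouts or overload, and both model functions are stubs.

```python
from typing import Callable

Model = Callable[[str], str]


class ModelUnavailable(Exception):
    """Hypothetical error a self-hosted client raises on timeout or overload."""


def make_router(self_hosted: Model, frontier: Model, is_hard: Callable[[str], bool]) -> Model:
    """Self-hosted model by default; hosted frontier model for hard requests
    and as a fallback when the self-hosted path is unavailable."""

    def route(prompt: str) -> str:
        if is_hard(prompt):
            return frontier(prompt)
        try:
            return self_hosted(prompt)
        except ModelUnavailable:
            return frontier(prompt)

    return route


# Stub backends for illustration.
def local_model(prompt: str) -> str:
    if "overload" in prompt:
        raise ModelUnavailable("GPU pool saturated")
    return "local: " + prompt


def hosted_model(prompt: str) -> str:
    return "frontier: " + prompt


# Placeholder heuristic: treat long prompts as hard.
route = make_router(local_model, hosted_model, is_hard=lambda p: len(p.split()) > 50)
```

Catching only the specific unavailability error, rather than every exception, keeps genuine bugs in the self-hosted path visible instead of silently turning them into frontier-model spend.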

5. When (and how) to fine-tune

Fine-tuning is powerful but should rarely be step one.

Good signs you might benefit from fine-tuning:

- You see consistent failure patterns that prompt engineering + retrieval can’t fix.

- You need the model to follow very specific formats or styles reliably.

- You want to replace a large generic model with a smaller specialized one for cost/latency reasons.

- You have (or can create) high-quality labeled examples of desired behavior.

Fine-tuning via API vs self-hosted

- Fine-tuning an API model

  - Simpler to start, minimal infra changes

  - Training and hosting still managed by the provider

  - Great when you already rely on that provider and just need specialization

- Fine-tuning open-source

  - More control and potential cost savings at scale

  - Requires MLOps: training pipelines, experiment tracking, versioning

  - Best when you already operate models yourself

Critical success factors

- Good training data (diverse, representative, not noisy)

- A clear baseline and evaluation set to compare against

- Governance and safe rollout (A/B tests, gradual traffic shifting)
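These three factors can be wired together in a few lines: score the baseline and the candidate on the same held-out set, gate promotion on a minimum gain, then shift traffic gradually with deterministic bucketing so each user sees a consistent variant. This is a sketch rather than a full experimentation framework; exact-match accuracy is a stand-in metric, and the salt string, variant names, and `min_gain` threshold are hypothetical.

```python
import hashlib
from typing import Callable, Sequence

Example = tuple[str, str]  # (input, expected output)


def accuracy(model: Callable[[str], str], eval_set: Sequence[Example]) -> float:
    """Exact-match accuracy; replace with a task-specific metric for free-form text."""
    correct = sum(model(x).strip() == y.strip() for x, y in eval_set)
    return correct / len(eval_set)


def should_promote(baseline_score: float, candidate_score: float, min_gain: float = 0.02) -> bool:
    """Only start shifting traffic if the candidate beats the baseline by a margin."""
    return candidate_score - baseline_score >= min_gain


def bucket(user_id: str, salt: str = "ft-rollout-v1") -> float:
    """Deterministically map a user to one of 10,000 buckets in [0, 1)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    return (int.from_bytes(digest[:8], "big") % 10_000) / 10_000


def choose_variant(user_id: str, rollout_fraction: float) -> str:
    """Users below the threshold get the fine-tuned model. Raising the fraction only
    adds users, so nobody flips back and forth between variants mid-rollout."""
    return "fine_tuned" if bucket(user_id) < rollout_fraction else "baseline"
```

A rollout might then step `rollout_fraction` through values such as 0.01, 0.05, 0.25, and 1.0, advancing only while production metrics hold.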

Rule of thumb:

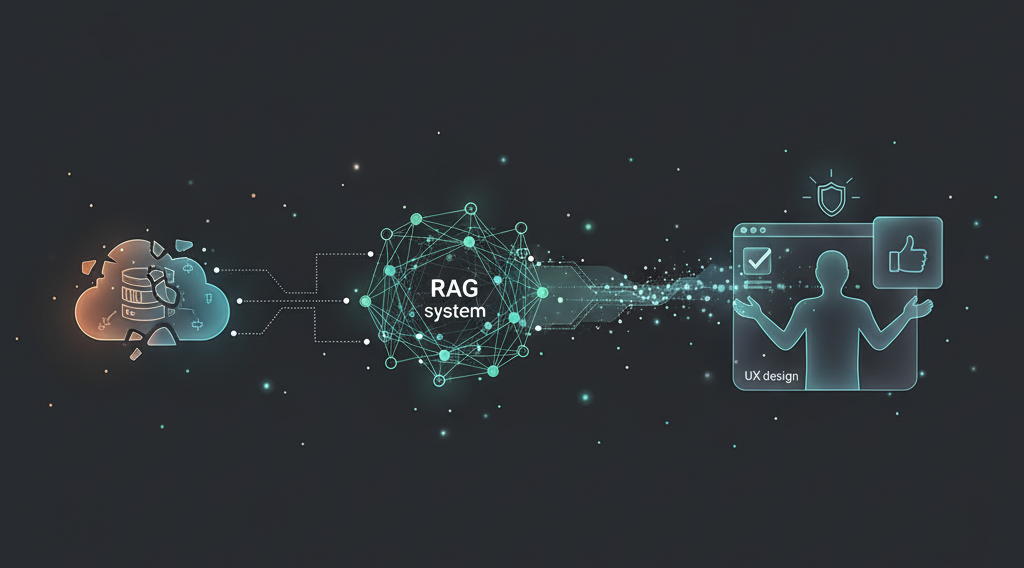

Exhaust prompting, system design, and RAG improvements first. Reach for fine-tuning when you understand the problem well enough to encode it in data.

6. A simple decision path

Here’s a no-nonsense way to decide where to start:

1. Is sending data to a third-party model strictly forbidden?

   - Yes → start with self-hosted / private-cloud models (open-source or vendor-managed)

   - No → go to step 2

2. Are you pre-MVP or still validating user value?

   - Yes → start API-first, design for swappability

   - No → go to step 3

3. Is your projected usage large enough that API costs will become painful soon?

   - Yes → explore hybrid: open-source for common paths, API for hard cases

   - No → stay with APIs and optimize prompts, caching, and calling patterns

4. Are you seeing systematic failures that a better model won’t easily fix (e.g., style, formatting, domain-specific jargon)?

   - Yes → consider fine-tuning (API-based first, then self-hosted if needed)

   - No → invest more in RAG, UX, and evaluation before fine-tuning
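For teams that like to record this decision next to their architecture notes, the path can be written as a small function. It encodes one reading of the steps: questions 1 and 2 end the walk on "Yes", while questions 3 and 4 each contribute a recommendation. `Situation` and `recommend` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    third_party_forbidden: bool   # step 1
    pre_mvp: bool                 # step 2
    api_costs_painful_soon: bool  # step 3
    systematic_failures: bool     # step 4: style, formatting, domain jargon


def recommend(s: Situation) -> list[str]:
    # Steps 1 and 2 end the walk when the answer is "Yes".
    if s.third_party_forbidden:
        return ["self-hosted / private-cloud models (open-source or vendor-managed)"]
    if s.pre_mvp:
        return ["API-first, designed for swappability"]
    # Steps 3 and 4 each add one recommendation.
    plan = [
        "hybrid: open-source for common paths, API for hard cases"
        if s.api_costs_painful_soon
        else "stay with APIs; optimize prompts, caching, and calling patterns"
    ]
    plan.append(
        "fine-tuning (API-based first, then self-hosted if needed)"
        if s.systematic_failures
        else "invest in RAG, UX, and evaluation before fine-tuning"
    )
    return plan


print(recommend(Situation(False, False, True, False)))
```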

7. Evolving your stack over time

Think of your LLM stack as a roadmap, not a one-time decision.

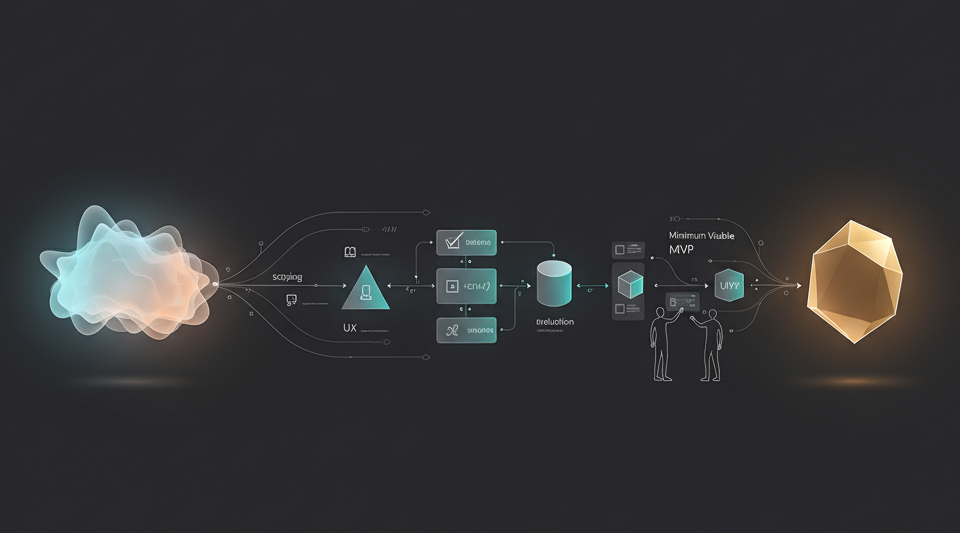

A realistic evolution might look like:

- Phase 1 – Learn fast

  - API models only

  - Focus on UX, retrieval, and understanding user value

- Phase 2 – Optimize & specialize

  - Introduce fine-tuning for key tasks

  - Add caching, routing, and lightweight evaluation

  - Start experimenting with open-source where it’s a clear win

- Phase 3 – Scale & own more

  - Operate a mix of self-hosted models + selective APIs

  - Mature observability, governance, and incident response

  - Continuously refine which tasks go to which model
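Of the Phase 2 additions, caching is often the cheapest to try. Below is a minimal exact-match LRU cache sketch, assuming responses are deterministic enough to reuse (for example, temperature 0 and no user-specific content in the answer). `ResponseCache` and `get_or_call` are hypothetical names, and semantic (embedding-based) caching is a different technique not shown here.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """Exact-match LRU cache for LLM responses, keyed on model name and prompt."""

    def __init__(self, max_entries: int = 10_000) -> None:
        self.max_entries = max_entries
        self._store: OrderedDict[str, str] = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        normalized = " ".join(prompt.split())  # ignore whitespace-only differences
        return hashlib.sha256(f"{model}\n{normalized}".encode()).hexdigest()

    def get_or_call(self, model: str, prompt: str, call: Callable[[str], str]) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        self.misses += 1
        response = call(prompt)
        self._store[key] = response
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response
```

Including the model name in the key means a routing change never serves one model's cached answer as another's, and the hit/miss counters give a quick read on whether caching is paying off.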

The “right” stack is the one that fits your stage, constraints, and ambitions—and that can evolve as your product and team grow.

Closing thoughts

Choosing between APIs, open-source, and fine-tuning isn’t about ideology. It’s about:

- What your users need

- What your organization can safely operate

- Where you’ll get the best ratio of value to complexity over the next 6–18 months

If you’re weighing options for your own LLM stack—or want to sanity-check a planned migration—our team at Arctura AI is always happy to help you map trade-offs and design a stack that fits your reality, not someone else’s architecture diagram.
