From AI idea to MVP: a practical checklist for product teams

Created Date

26 Nov, 2024

INTRODUCTION

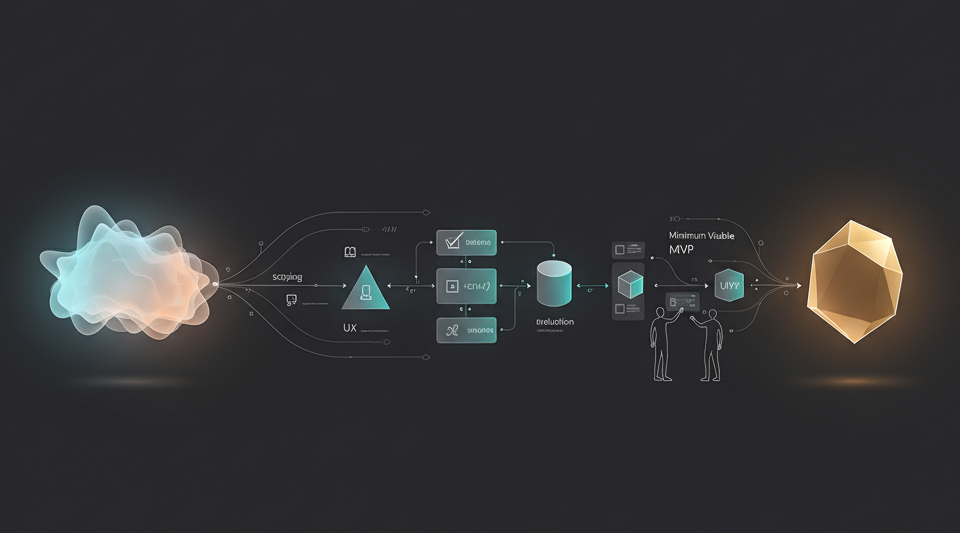


AI ideas are easy.

Shipping something real—on time, within budget, and actually useful—is the hard part.

At Arctura AI, we’ve helped product teams turn rough AI concepts into working MVPs across RAG, chatbots, vision, and analytics. Over time, we noticed that successful projects tend to follow the same pattern.

This article is a practical checklist you can use to go from “We should add AI” to a shippable MVP with much less guesswork.

1. Clarify the problem, not the model

Before talking about GPTs, embeddings, or diffusion models, answer:

- Who is the primary user? (role, team, experience level)

- What task are they doing today without AI?

- Where is the pain? (too slow, too manual, too error-prone, too expensive)

- How will we know this is successful? (time saved, fewer errors, more conversions, better NPS…)

Write one sentence in this format:

“For [user] who [painful task], we want AI to [outcome] so that [business impact].”

If you can’t write that clearly, you’re not ready to choose a model yet.

✅ Checklist

- Primary user and workflow documented

- Pain points and success metrics agreed with stakeholders

- Non-goals written down (what this MVP will not do)

2. Decide what “MVP” actually means

Many AI projects fail because “MVP” quietly grows into “version 2.5 of the dream product”.

Keep the first release narrow:

- One core user journey, e.g. “answer questions on internal docs” or “generate product descriptions from a template”.

- One or two high-value use cases, e.g. “top 20% most frequent questions”.

- A small, realistic scope: what can be built and iterated on in 4–8 weeks, not 6 months.
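If you want to turn “top 20% most frequent questions” into a concrete list, a quick pass over a question log is usually enough. The Python sketch below is a minimal illustration, assuming you already have raw questions as strings; the function name `top_fraction_questions`, the very light normalization (trim and lowercase only), and the sample log are all hypothetical.

```python
import math
from collections import Counter


def top_fraction_questions(log: list[str], fraction: float = 0.2) -> list[tuple[str, int]]:
    """Return the most frequent distinct questions, keeping the top `fraction` of them."""
    # Light normalization only (assumption): real logs usually need smarter deduplication.
    counts = Counter(q.strip().lower() for q in log if q.strip())
    keep = max(1, math.ceil(len(counts) * fraction))
    return counts.most_common(keep)


# Hypothetical sample log.
log = [
    "How do I reset my password?",
    "how do I reset my password?",
    "Where is the VPN guide?",
    "How do I reset my password?",
    "Where is the VPN guide?",
    "Who approves expenses?",
    "What is the holiday policy?",
    "Can I expense a monitor?",
]

top = top_fraction_questions(log)
# Share of all logged questions that the selected subset would cover.
coverage = sum(n for _, n in top) / len(log)
print(top)       # [('how do i reset my password?', 3)]
print(coverage)  # 0.375
```

Checking coverage alongside the list is a cheap way to see whether the narrow MVP scope still addresses a meaningful share of real demand.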

For the MVP, prefer:

- Quality over coverage – it’s better to delight one scenario than be mediocre at ten.

- Human-in-the-loop where stakes are high (review, approve, edit) instead of full automation.

✅ Checklist

- MVP scope defined in terms of user journey, not screens

- Out of scope items documented (for later releases)

- Target timeline (e.g. 4–8 weeks) agreed

3. Map data and constraints early

Before any modeling, make sure you understand:

- Data sources: Where does the relevant information live? (docs, tickets, CRM, warehouse, images…)

- Access and permissions: Can we legally and technically use this data for AI? Any PII / compliance issues?

- Update frequency: How often does the data change? (real-time vs nightly vs static)

- Latency & reliability requirements: Is this for offline analysis, or in-the-loop user interactions?

This will often change your approach. For example:

- If data is messy and siloed, the first MVP might focus on one clean department instead of the whole company.

- If latency must be low, you may need smaller models, caching, or pre-computation.

✅ Checklist

- List of data sources and owners

- Access granted for at least one “pilot” dataset

- Basic data quality issues identified

- Latency, uptime, and privacy constraints captured
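Keeping the data-source list as structured records rather than a slide makes it easy to see which sources are actually ready for a pilot. This is a minimal Python sketch; the `DataSource` fields and the blocking rules (no owner, no access, unreviewed PII) are our own hypothetical choices that simply mirror the checklist above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataSource:
    name: str
    owner: Optional[str]
    access_granted: bool
    contains_pii: bool
    pii_reviewed: bool
    update_frequency: str  # e.g. "real-time", "nightly", "static"


def blockers(source: DataSource) -> list[str]:
    """List the reasons this source cannot be used for a pilot yet (hypothetical rules)."""
    issues = []
    if not source.owner:
        issues.append("no owner")
    if not source.access_granted:
        issues.append("access not granted")
    if source.contains_pii and not source.pii_reviewed:
        issues.append("PII not reviewed")
    return issues


# Hypothetical inventory entries.
inventory = [
    DataSource("Help center articles", "Support lead", True, False, False, "nightly"),
    DataSource("CRM tickets", None, False, True, False, "real-time"),
]

for src in inventory:
    print(src.name, "->", blockers(src) or "ready for pilot")
```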

4. Choose the simplest architecture that can work

Now we can talk about models and infra—but with constraints in mind.

Key principles:

- Start from the simplest design that can solve the problem.

- Prefer configurable components (prompt templates, routing rules, retrieval settings) over hard-coded logic.

- Design with cost and observability in mind from day one.
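One way to keep prompt templates, routing rules, and retrieval settings configurable is to load them from data instead of hard-coding them. In this minimal Python sketch, the JSON layout, the model names, and keys such as `top_k` and `min_score` are all hypothetical; the point is only that changing behavior means editing config, not code.

```python
import json
from dataclasses import dataclass

# Hypothetical config; in practice this would live in a file or config service.
CONFIG_JSON = """
{
  "prompt_template": "Answer using only the context below.\\nContext:\\n{context}\\nQuestion: {question}",
  "retrieval": {"top_k": 5, "min_score": 0.3},
  "routing": [
    {"if_contains": "invoice", "model": "small-finance-model"},
    {"if_contains": "", "model": "default-model"}
  ]
}
"""


@dataclass(frozen=True)
class PipelineConfig:
    prompt_template: str
    top_k: int
    min_score: float
    routing: list[dict]


def load_config(raw: str) -> PipelineConfig:
    data = json.loads(raw)
    return PipelineConfig(
        prompt_template=data["prompt_template"],
        top_k=data["retrieval"]["top_k"],
        min_score=data["retrieval"]["min_score"],
        routing=data["routing"],
    )


def pick_model(cfg: PipelineConfig, question: str) -> str:
    # First matching rule wins; an empty pattern acts as the catch-all default.
    for rule in cfg.routing:
        if rule["if_contains"] in question.lower():
            return rule["model"]
    raise ValueError("no routing rule matched")


cfg = load_config(CONFIG_JSON)
print(pick_model(cfg, "Where is my invoice?"))  # small-finance-model
prompt = cfg.prompt_template.format(context="(retrieved passages)", question="Where is my invoice?")
```

Because every call site reads from `cfg`, swapping the model for a route or raising `top_k` can be tested and reverted without touching pipeline code.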

Examples:

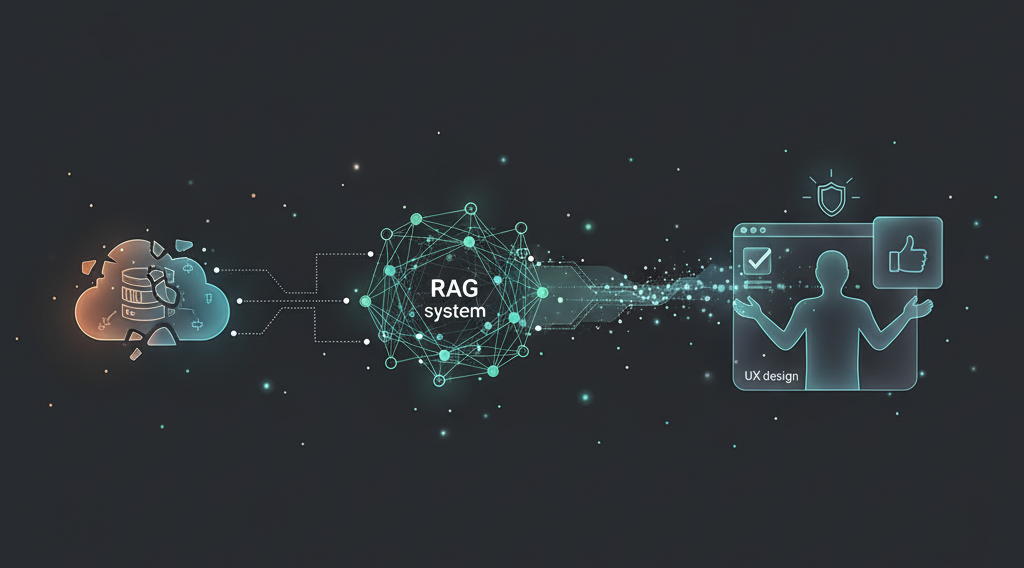

- For a document Q&A tool:
  - Start with RAG + a single well-chosen LLM, hybrid retrieval, basic reranking.
  - Add agents, tools, or complex orchestration only if necessary.

- For content generation:
  - Use templates + prompt engineering + style guides before jumping to fine-tuning.
  - Add specialized models only if generic ones can’t hit the target quality.
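For the “hybrid retrieval” step, one common way to merge keyword and vector results is reciprocal rank fusion (RRF). We use it here purely as an illustration, not as the only or prescribed method. The sketch assumes each retriever returns a ranked list of document IDs; the IDs are hypothetical, and `k=60` is the constant conventionally used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs into a single ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each retriever contributes 1 / (k + rank); high ranks contribute more.
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results from a keyword (e.g. BM25) retriever and a vector retriever.
keyword_hits = ["doc_refunds", "doc_pricing", "doc_shipping"]
vector_hits = ["doc_pricing", "doc_refunds_faq", "doc_refunds"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
for doc_id, score in fused:
    print(f"{doc_id}: {score:.4f}")
# Order: doc_pricing, doc_refunds, doc_refunds_faq, doc_shipping
```

RRF only needs ranks, so you don’t have to calibrate keyword scores against embedding similarities. A “basic reranking” step (for example, a cross-encoder over the top few fused results) would then run on this list; it isn’t shown here.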

✅ Checklist

- Sequence diagram or architecture sketch created

- Model choices justified against constraints (quality, cost, latency, risk)

- Clear plan for monitoring usage, latency, and failures

5. Design the UX around trust and control

An AI MVP isn’t just about what the model can do; it’s about what the user feels safe doing with it.

Good UX makes AI:

- Legible – users see why it responded that way

- Editable – users can correct or refine output

- Bounded – users know what it can and cannot do

Practical ideas:

- Show sources and confidence cues for generative answers.

- Make it one click to edit, retry, or narrow the query.

- For generators, offer presets / templates instead of a blank text box.

- For decision support, keep a clear line: AI suggests, human decides.

✅ Checklist

- UX mockups reviewed with real users

- States defined for “no answer / low confidence / errors”

- Editing and feedback mechanisms included from v1
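The “no answer / low confidence / errors” states in the checklist above can be made explicit in code so the UI never has to guess what to show. This Python sketch assumes the pipeline exposes a single confidence score between 0 and 1 plus a list of sources; the thresholds 0.35 and 0.6, the messages, and all names are hypothetical placeholders to tune against your own evaluation set.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class AnswerState(Enum):
    ANSWER = "answer"
    LOW_CONFIDENCE = "low_confidence"
    NO_ANSWER = "no_answer"
    ERROR = "error"


@dataclass
class UiResponse:
    state: AnswerState
    text: str
    sources: list[str] = field(default_factory=list)


# Hypothetical thresholds; tune them on your own evaluation set.
ANSWER_THRESHOLD = 0.6
LOW_CONFIDENCE_THRESHOLD = 0.35


def to_ui_response(answer: Optional[str], score: Optional[float], sources: list[str]) -> UiResponse:
    if answer is None or score is None:
        return UiResponse(AnswerState.ERROR, "Something went wrong. Please retry.")
    if not sources or score < LOW_CONFIDENCE_THRESHOLD:
        return UiResponse(AnswerState.NO_ANSWER, "I couldn't find this in the documents. Try narrowing the question.")
    if score < ANSWER_THRESHOLD:
        # Still show the draft, but flag it and keep the sources visible.
        return UiResponse(AnswerState.LOW_CONFIDENCE, answer, sources)
    return UiResponse(AnswerState.ANSWER, answer, sources)


print(to_ui_response("Draft answer", 0.8, ["policy.md"]).state)  # AnswerState.ANSWER
print(to_ui_response("Draft answer", 0.5, ["policy.md"]).state)  # AnswerState.LOW_CONFIDENCE
print(to_ui_response(None, None, []).state)                      # AnswerState.ERROR
```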

6. Build evaluation into the MVP

If you don’t measure, you can’t improve—and AI systems are especially prone to “demo looks great, production feels off”.

For an MVP, keep evaluation light but deliberate:

- Offline set: 20–50 real examples (inputs + ideal outputs) for spot-checking changes.

- Online signals:
  - Thumbs up / down, quick rating
  - Edit distance (how much users rewrite generated text)
  - Task completion time or drop-off rate

- System metrics: latency (P50 / P95), error rates, cost per call or per task.
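Two of these signals are easy to compute yourself: an edit-distance ratio between the generated text and what the user finally kept, and nearest-rank latency percentiles. This is a minimal Python sketch; `edit_ratio` and the sample latencies are hypothetical, and in practice most teams pull percentiles from their monitoring stack.

```python
import math


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def edit_ratio(generated: str, final: str) -> float:
    """0.0 means the user kept the text as-is; values near 1.0 mean heavy rewriting."""
    longest = max(len(generated), len(final))
    return edit_distance(generated, final) / longest if longest else 0.0


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, with p between 0 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


print(edit_distance("kitten", "sitting"))  # 3
latencies_ms = [120, 180, 95, 400, 150, 130, 2100, 160, 140, 170]  # hypothetical samples
print(percentile(latencies_ms, 50), percentile(latencies_ms, 95))  # 150 2100
```

With only ten samples, P95 is effectively the slowest call, which is a reminder that tail-latency numbers need enough traffic before they mean much.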

Don’t chase perfect metrics from day one. Focus on:

“Is this clearly better than the status quo for our MVP use case?”

✅ Checklist

- Small but real evaluation set agreed with stakeholders

- Success metrics defined (quant + qual)

- Logging and basic dashboards planned

7. Plan for iteration after launch

An MVP is not the end; it’s the beginning of learning.

Before launch, decide:

- Who owns the AI feature after v1? (product + engineering + data)

- How frequently you’ll review feedback and metrics (weekly / bi-weekly)

- What knobs you can turn safely without a full redeploy (prompts, thresholds, routing, retrieval params…)
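Runtime knobs are only safe to turn if you can undo a bad change quickly. The sketch below keeps every published version of the settings in memory so the latest change can be rolled back or a safe mode switched on. `ConfigStore` and the keys shown are hypothetical; a real system would persist versions in a database or config service and have the running app re-read them.

```python
import copy
from typing import Any


class ConfigStore:
    """Keeps every published version of runtime settings so a bad change can be undone."""

    def __init__(self, initial: dict[str, Any]):
        self._versions = [copy.deepcopy(initial)]

    @property
    def current(self) -> dict[str, Any]:
        # Return a copy so callers cannot mutate a published version in place.
        return copy.deepcopy(self._versions[-1])

    @property
    def version(self) -> int:
        return len(self._versions)

    def publish(self, **changes: Any) -> int:
        new = self.current
        new.update(changes)
        self._versions.append(new)
        return self.version

    def rollback(self) -> int:
        # Drop the latest version, but never the initial baseline.
        if len(self._versions) > 1:
            self._versions.pop()
        return self.version


store = ConfigStore({"prompt_version": "v1", "confidence_threshold": 0.6, "top_k": 5, "safe_mode": False})
store.publish(prompt_version="v2", top_k=8)
print(store.current["prompt_version"])  # v2
store.rollback()
print(store.current["prompt_version"])  # v1
```

The same mechanism covers a safe mode: publishing `safe_mode=True` can tell the app to fall back to a conservative path (for example, showing sources only) until the issue is fixed.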

Good questions to revisit regularly:

- Are we seeing new user behaviors we didn’t design for?

- Where do people abandon, override, or ignore the AI?

- Are costs staying aligned with value as usage grows?

✅ Checklist

- Post-launch owner and review cadence agreed

- Backlog seeded with v1 learnings and “nice to haves”

- Rollback / safe-mode strategy in place

Closing thoughts

Going from AI idea to MVP doesn’t have to be chaotic.

If you:

- Nail the problem and user

- Keep the scope small and valuable

- Respect data and constraints

- Choose simple, observable architectures

- Design for trust and control

- Build in evaluation from the start

- Commit to steady iteration

…you dramatically increase your odds of shipping something that your users will actually adopt—and that your business will want to scale.

If your team is exploring an AI MVP and wants a second pair of eyes on feasibility, architecture, or cost, the Arctura AI team is always happy to help.
